Common Characteristics in a Big Data Project – The Data Engineer

Availability, fragmentation, and heterogeneous data

The first step in any big data project is to review the data sources. Whether they are internal or come from external providers, it is important to understand the nature, granularity, and volume of the data. At this stage, the Data Engineer should ask the questions needed to validate the hypothesis behind the project, so as to identify which data we need and in what form.
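To make that review concrete, here is a minimal Python sketch of a first-pass profile of a single source. It assumes, purely for illustration, that records arrive as a list of dictionaries with a timestamp field; the function `profile_source` and the sample `sales` data are hypothetical. It reports volume as a record count, nature as the Python types observed in each field, and granularity as the smallest gap between distinct timestamps.

```python
from collections import Counter
from datetime import datetime


def profile_source(records, time_field):
    """Hypothetical first-pass profile of a source given as a list of dict records."""
    # Volume: number of records received.
    volume = len(records)
    # Nature: count the Python types observed in each field.
    field_types = {}
    for rec in records:
        for key, value in rec.items():
            field_types.setdefault(key, Counter())[type(value).__name__] += 1
    # Granularity: smallest gap, in seconds, between distinct timestamps.
    stamps = sorted({rec[time_field] for rec in records if time_field in rec})
    gaps = [(later - earlier).total_seconds() for earlier, later in zip(stamps, stamps[1:])]
    return {
        "volume": volume,
        "field_types": {name: dict(counts) for name, counts in field_types.items()},
        "granularity_seconds": min(gaps) if gaps else None,
    }


# Illustrative sample data: the mixed types in "amount" are deliberate.
sales = [
    {"ts": datetime(2025, 1, 1, 10), "store": "A", "amount": 12.5},
    {"ts": datetime(2025, 1, 1, 11), "store": "B", "amount": "9,90"},
    {"ts": datetime(2025, 1, 1, 13), "store": "A", "amount": 30.0},
]
report = profile_source(sales, "ts")
print(report["volume"], report["granularity_seconds"])  # 3 3600.0
print(report["field_types"]["amount"])  # {'float': 2, 'str': 1}
```

In this sample, the mix of `float` and `str` values in `amount` is the kind of signal that should prompt questions to the data provider before any modelling begins.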

Unified Data Model

Data is the minimal unit of information, and by analyzing it we can extract insights relevant to decision making. To model data in a structured way, we should consider two aspects:

· Human intervention, whether qualitative or quantitative, during data entry or validation. It can leave a data source with incorrect values, or with values unrelated to the nature of the field, which hinder subsequent processing.

· The lack of a universal criterion for aligning data granularity. The same information can be represented in many ways, and without a single shared standard it ends up scattered across inconsistent formats.
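The two aspects above can be illustrated with a small, hypothetical normalization step that maps raw records from different sources into one unified model. The accepted date formats, the field names (`date`, `amount`, `source`), and the rule that amounts must be non-negative are assumptions made for this example, not part of any particular standard. Values that cannot be mapped are flagged rather than silently dropped, so they can be reviewed.

```python
from datetime import date, datetime
from typing import Optional

# Hypothetical date formats observed across the sources.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")


def parse_date(raw) -> Optional[date]:
    """Return a date if raw matches any accepted format, otherwise None."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(str(raw).strip(), fmt).date()
        except ValueError:
            continue
    return None


def parse_amount(raw) -> Optional[float]:
    """Accept numbers, or strings using ',' or '.' as the decimal separator."""
    if isinstance(raw, (int, float)):
        value = float(raw)
    else:
        try:
            value = float(str(raw).strip().replace(",", "."))
        except ValueError:
            return None
    # Hypothetical business rule: amounts cannot be negative (NaN is also rejected).
    return value if value >= 0 else None


def unify(record):
    """Map a raw record to the unified model and list the fields that failed."""
    errors = []
    day = parse_date(record.get("date", ""))
    if day is None:
        errors.append("date")
    amount = parse_amount(record.get("amount", ""))
    if amount is None:
        errors.append("amount")
    clean = {"date": day, "amount": amount, "source": record.get("source", "unknown")}
    return clean, errors


# Illustrative raw records: the same day written two ways, plus invalid values.
raw_records = [
    {"date": "2025-01-16", "amount": "9,90", "source": "pos"},
    {"date": "16/01/2025", "amount": 12.5, "source": "web"},
    {"date": "31/02/2025", "amount": "-3", "source": "web"},
]
results = [unify(r) for r in raw_records]
for clean, errors in results:
    print(clean["date"], clean["amount"], errors)
```

Returning the list of failed fields alongside the cleaned record keeps the decision about what to do with bad data (reject, quarantine, or ask the source to correct it) outside the normalization step itself.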

At this point, we again turn to the Data Engineer, who is responsible for bringing order to the chaos of data: unifying, categorizing, and preparing it so that artificial intelligence algorithms can process it.

Functions of the Data Engineer

Capturing large volumes of internal and external data, and processing them to unify and cleanse them, is the backbone of any big data project. This work takes up a large share of the project's time and is fundamental to its success. We highlight the following functions of a Data Engineer:

· Guaranteeing the quality of the conclusions drawn, given that the data at the source can change.

· Continuous provisioning of data to and from the Data Lake by developing production processes.

· Design and development of data processing software, as well as its evolutionary and corrective maintenance.

· Design and implementation of APIs that make the most of the insights obtained from data processing.
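As an illustration of the first two functions, the sketch below shows one possible quality gate run on each batch before it is provisioned to the Data Lake. The checks (schema, volume drift against the previous load, and share of missing values), the names `check_batch` and `BatchCheck`, and the thresholds are all hypothetical; a real project would derive them from its own sources.

```python
from dataclasses import dataclass, field


@dataclass
class BatchCheck:
    passed: bool
    issues: list = field(default_factory=list)


def check_batch(batch, expected_fields, previous_count, max_drift=0.5, max_null_ratio=0.1):
    """Hypothetical quality gate run on a batch before loading it into the Data Lake."""
    issues = []
    # Schema: every record should contain all expected fields.
    for i, rec in enumerate(batch):
        missing = expected_fields - set(rec)
        if missing:
            issues.append(f"record {i} missing {sorted(missing)}")
    # Volume: relative change against the previous load (illustrative threshold).
    if previous_count:
        drift = abs(len(batch) - previous_count) / previous_count
        if drift > max_drift:
            issues.append(f"volume drift {drift:.0%}")
    # Completeness: share of records where an expected field is absent or None.
    if batch:
        for name in sorted(expected_fields):
            ratio = sum(1 for rec in batch if rec.get(name) is None) / len(batch)
            if ratio > max_null_ratio:
                issues.append(f"{name} null ratio {ratio:.0%}")
    return BatchCheck(passed=not issues, issues=issues)


# Illustrative batch: one null value, one missing field, and far fewer rows than last time.
batch = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": None}, {"id": 3}]
result = check_batch(batch, {"id", "amount"}, previous_count=10)
print(result.passed)
for issue in result.issues:
    print(issue)
```

Because the source data can change between loads, comparing each batch against the previous one catches silent upstream changes before they propagate into the conclusions drawn downstream.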

See more articles related to Blog

Created on: 25/02/2025

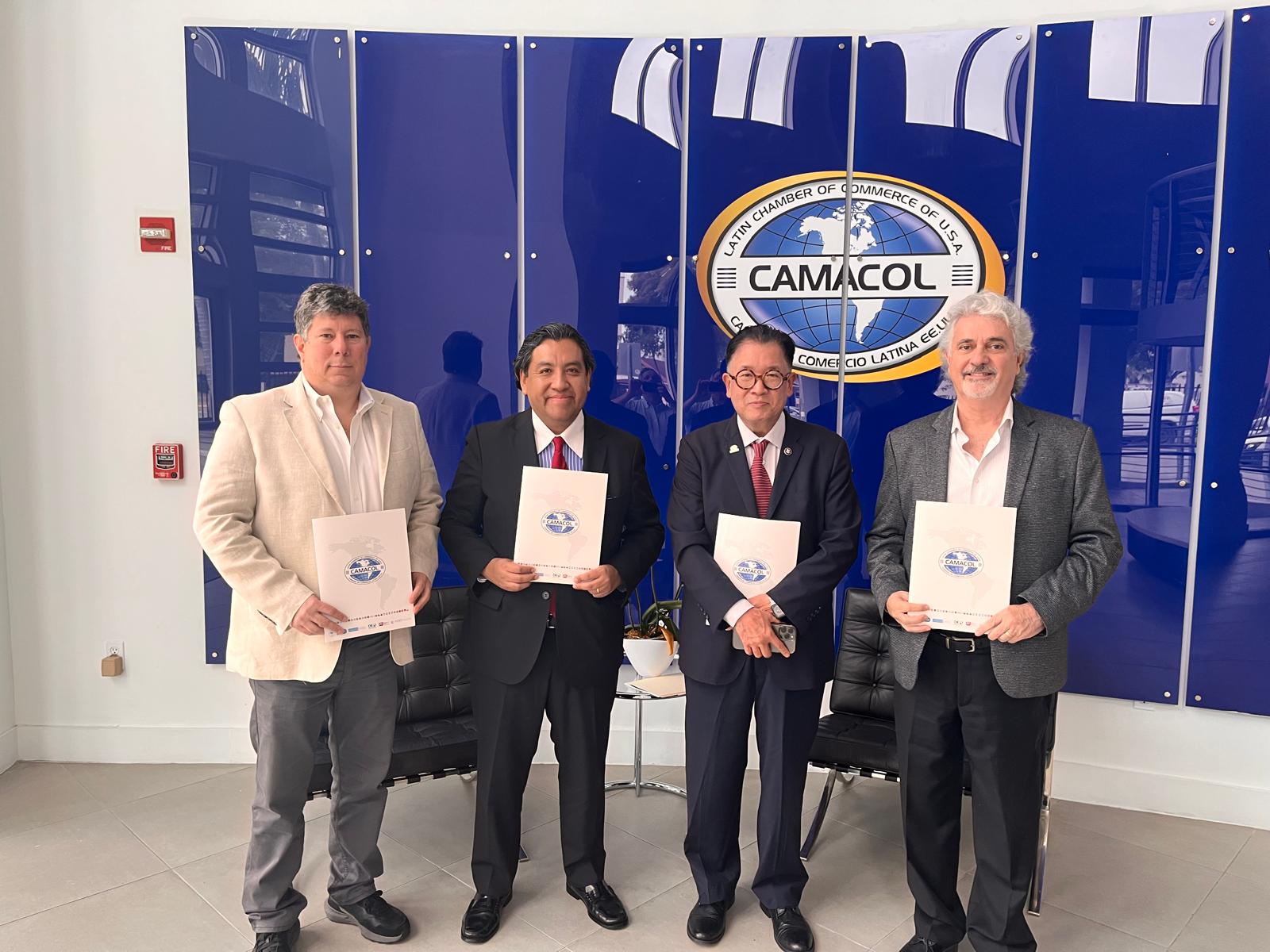

MIU City University Miami and CAMACOL Sign an Agreement to Promote Education and Business Development

Miami, January 16, 2025 MIU City University Miami and The Latin Chamber of Commerce of the United States (CAMACOL) have […]

Blog

Created on: 18/03/2025

UNIR Innovation Day Miami: Chema Alonso and Iker Casillas Lead the Charge for Cibersecurity and AI Education

Chema Alonso and Iker Casillas advocate for quality education to tackle the challenges of Cybersecurity and AI at UNIR Innovation […]

Blog

Created on: 18/03/2025

Commencement MIU City University Miami 2023

Hundreds of MIU students celebrated the culmination of their university studies in an emotional commencement ceremony. A total of 358 […]

Blog